Leveraging Examples For Clarity

How can we assess model reliability, discern its strengths & limitations, and identify specific areas for

targeted data and feature adjustments?

What

Example-based explanations utilize specific instances or what-if scenarios to illustrate the model’s decision-making process (Molnar, 2023).

How

Molnar describes several types of example-based explanations within Interpretable Machine Learning.

Adversarial Examples

These can be crafted by utilizing counterfactuals to learn how to introduce minor modifications to the input data that lead to the model making errors. Adversarial examples expose model vulnerabilities and security vulnerabilities to be patched in order to enhance resilience.

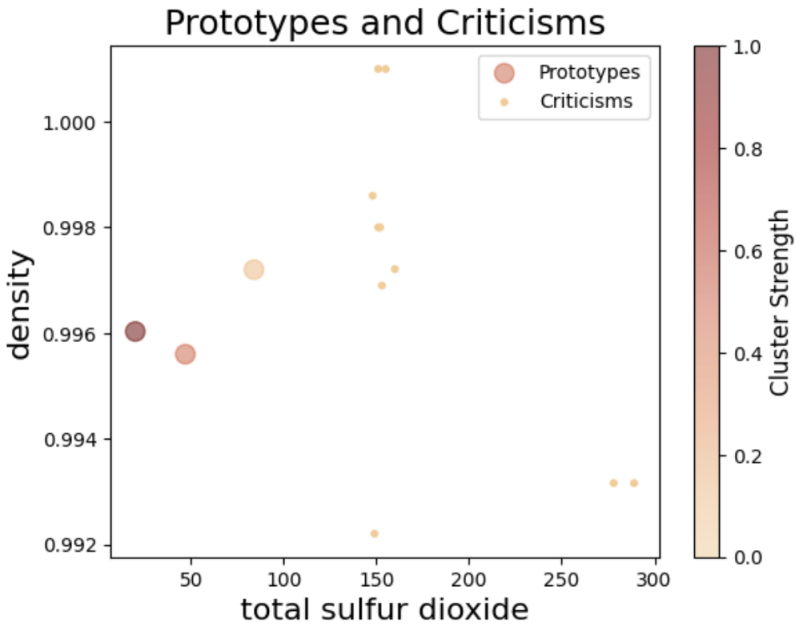

Prototypes & Criticisms

Prototypes, actual examples drawn from the center of dense clusters of observations, illuminate which regions of the feature space the model has good coverage over.

Criticisms are observations that are not near any prototypes, indicating potential areas of poor model performance due to inadequate data representation.

Influential Instances

Some data points play a larger role in shaping the model. Identifying these instances helps us see what data is steering the model’s learning. Calculating the influence of each instance can be incredibly expensive, depending on which technique is used (e.g. if using deletion diagnostics, which trains a new model for a dataset minus the observation of interest). Influence functions can be used to approximate the influence of instances, but it can only be used for certain types of models.

Image created by Ari Tal using an iPython Notebook, 2024

When

Each type of example-based explanation caters to specific needs:

- Adversarial examples: These can be used for security assessments, testing the model’s reliability under varied conditions (Molnar, 2023).

- Prototypes and criticisms: Assess data coverage prior to model training to detect potential data-driven biases.

- Influential instances: These can be used to trace what training data shaped the model's learning and thus its behavior. This can potentially help with debugging data-related causes for known model biases and other model issues.

Potential Impacts

Example-based explanations offer a tangible glimpse into the model's decision-making, providing an intuitive understanding of the model's behavior. They are invaluable for identifying biases, validating model robustness, and ensuring AI decisions are understandable and justifiable. That said, the explanations serve slightly different purpose:

- Adversarial Examples: Identify points of model failure and thus provide clarity on how to make the model more robust and reliable (Molnar, 2023).

- Prototypes, criticisms, and influential instances: Assess the model's strengths, limitations, and biases with respect to areas of the feature space (Molnar, 2023). As such they direct focus to areas needing data augmentation or feature refinement to bolster model performance and equity.